Project Background

This project will build upon the work completed in Data Warehouse Project. This project will take that baseline work and build an automated ELT process using Apache Airflow. This project used the baseline python sql scripts written earlier and the database schemas already developed and focused on building the workflow using Airflow

The GitHub for this project is located here: Data-Pipeline-With-Airflow

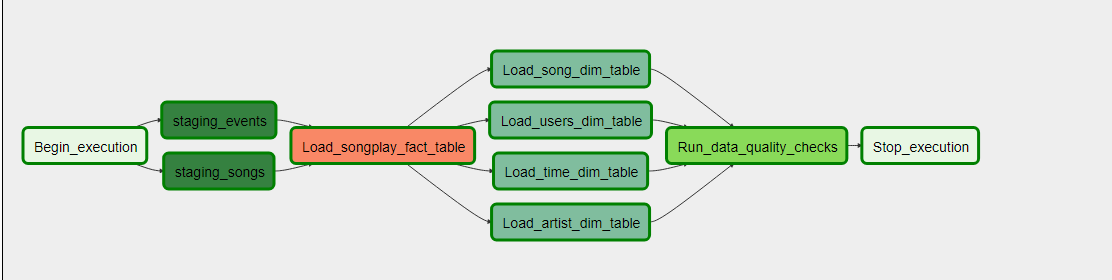

Designing the DAG

The DAG is a Directed Acyclic Graph. Generally speaking this is a map of the work and processes that need to take place. Work can branch off and even happen in parallel. The important part is that work never goes backwards.

- In the first stage the data will be copied from the s3 buckets to the staging tables.

- Staging Events: For this portion data was copied from the log s3 bucket into the staging table. This portion was a bit difficult to format correctly as the log JSON is a JSON-line file. Airflow must have a configuration listing this specifically.

- Staging Songs: Data was copied from the Song s3 bucket into the songs staging table. This data was not in JSON-lines format and just JSON.

- Load Songplay Fact Table: As the songplay fact table is the central table in the final schema, this table is loaded first, because it is important to know early if this insert fails. It could be done with the dimension tables, but this way ensures the inserts are conducted without an error.

- The dimension tables were loaded in parallel.

- Load Song Dimension Table

- Load Users Dimension Table

- Load Time Dimension Table

- Load Artist Dimension Table

- Run Data Quality Checks:The last step was to run a basic count query against the tables to ensure they had been loaded with data.

Lessons Learned/Final Thoughts

This was perhaps one of my favorite projects. This project went beyond just writing table creation statements and insert queries, but rather focused on how to make this type of data movement sustainable. While running a Python ETL script manually once a day is not difficult or time consuming, it is a process that can easily be automated. By using a product such as airflow it is easy to not only automate the process but also monitor the pipeline for issues.

While the project itself was not difficult, it was important to learn the concepts and how to implement a sustainable process into Airflow.